About

Hello! I’m interested in compilers, programming languages, and performance.

I work on the NVHPC C, C++ and Fortran compilers, the LLVM Flang Fortran compiler, and Python JIT compilers at NVIDIA.

These are some of my favorite posts:

Prior to working at NVIDIA, I optimized scientific applications at Pacific Northwest National Laboratory by leveraging different kinds of parallelism on CPUs and GPUs. Prior to that, I was a Data Science intern at Micron in the D.C. metro area.

You can also find me here:

These views do not in any way represent those of NVIDIA or any other organization or institution that I am professionally associated with. These views are entirely my own.Orality, Literacy, and Code

1/1/2026

Walter Ong, in Orality and Literacy (Ong82), argues that the medium of thought profoundly shapes how we think. Ong focuses on the transition from oral cultures to literate ones. While he later considered digital media, computer programs were not central to Orality and Literacy; programming languages are not considered at all:

Although the full relationship of the electronically procesed word to the orality-literacy polarity with which this book concerns itself is too vast a subject to be considered in its totality here, some few points need to be made…

Programming languages are of course a small subset of the electronically processed word, but this was the first place my mind went to. When we consider the impact of literature on human history and how comparatively brief of a time computers have been around, I have to imagine the full scope of the impact of programming languages on human cognition has yet to be imagined. At first, I thought programming languages either sit outside Ong’s oral/literate spectrum or are simply another form of literature entirely. I now believe that they do belong on the same spectrum, but are not wholly subsumed by literature. I begin by examining some notable contrasts between orality and literacy before attempting to extend Ong’s analysis to programming languages.

We will call people that primarily speak and do not know how to read or write primarily oral (or just oral) people, and those raised with literacy literate people. This is not a value judgment.

Links are at the end of the series of posts.

Orality and Literacy

If you’ve already read Orality and Literacy, this post might not be useful. This post reviews content from the book that will be relevant to the applications to programming languages later on.

Cognitive Technologies

Consider an oral person with a novel idea. They want to spread this idea to as many people as possible. What are their options? They could either:

- go to far-away places and speak in front of many people, or

- teach it to people who will spread it by word-of-mouth.

There is no way for them to record their thoughts and send them elsewhere; nothing remains of the thought except the traces in the minds of those who heard it. The thought exists as sound and event; it survives only as it is shared. Oral thought is inherently social, contextual, and temporary.

Ong notes that oral cultures rely on formulaic devices (like cliches, narratives, rhymes and rituals) to more reliably transmit ideas. These are cognitive technologies which extend memory and enable transmission. Literate people use different technologies (like line breaks, paragraphs, and mathematical notation) to preserve and organize thought.

What looks like dull or onerous repetition in the oral arts (Homeric hexameter, oral histories, mnemonic formulas) is better interpreted as technology applicable to a particular medium of communication rather than evidence of intellectual inferiority. A scribe might use dark ink on sturdy paper to ensure their ideas are accurately transmitted to someone in another country. So too do oral artists leverage the technology at their disposal to communicate as clearly as possible. Translation is difficult; tools useful in one medium may be useless, strange, or redundant in another.

The Medium Restructures Consciousness

Central to Ong’s thesis is that the means of communication change cognition. Before applying Ong’s analysis to programming languages, we need to understand how orality and literacy structure cognition differently.

Ong avoided using the term “medium” because it encourages readers to consider communication to work like this:

- Some one has a thought they would like to communicate.

- They encode that thought into a little nugget capable of being transmitted across a medium (like speech or literature).

- They transmit that nugget to another person, who

- decodes the nugget and downloads the thought into their brain.

Thinking of a ‘medium’ of communication or of ‘media’ of communication suggests that communication is a pipeline transfer of units of material called ‘information’ from one place to another… This model obviously has something to do with human communication, but, on close inspection, very little, and it distorts the act of communication beyond recognition.

In real human communication, the sender has to be not only in the sender position but also in the receiver position before he or she can send anything.

Communication can be something you do to someone else, and it can fundamentally act on the sender, not just the receiver. When we speak or write, it does not just transfer parcels of information to another person, but it fundamentally changes us. Writing enables entirely new ways of thinking. There was no such thing as a “list” in primarily oral cultures, nor was there the concept of “looking something up.” Where could anything be “looked up?” The closest conceptual match would be asking your wisest elder.

An oral person’s knowledge is organized by narrative. It is not possible to represent or maintain strict hierarchies or higher-level abstractions in narrative. Orality requires immediate social and physical context, and is therefor more socially and communally grounded.

Literacy enables greater individualism and precision. Authors often attempt context-independence such that their work does not rely too much on the audience’s context. This enables precise, systematic reasoning divorced from immediate circumstances. Writing encourages thinking of works as self-contained units with clear beginning, middle, and end.

Next, we’ll build on these ideas and apply them to programming languages.

Orality and Code

How do programming languages relate to orality? At first glance, code might seem to belong entirely to literature, having little in common with orality. It’s written, stored, and used as text, so it must be literature. While I mostly agree that code is an extension of and companion to literature, I was surprised by the connections with orality. Code is more difficult to separate from its context than literature, it’s inherently active, and it relies on formulaic patterns for communication between communities.

There is no word to describe for orality what “literature” describes for literacy. To stand in for such a word, we will use the “oral arts,” meaning works of communication that are native to primally oral people. This includes public rhetoric, recitations of songs, oral histories, and the like.

Cognitive Tech in Orality and Code

Programmers use cognitive technologies similar to oral formulas. Design patterns, comments, and naming conventions are shared devices that make programs interpretable. A future generation with different tools and more advanced programming literacy might look at our tools and ask: “Why all the repetition? Why the mythology? Just tell the computer what you want it to do.” But these metaphors and repetitive structures can and do help us manage complexity and communicate our intent.

Consider object-oriented programming. OOP is a metaphor we use to reason about programs: we talk about objects having attributes and behaviors, but those are conceptual tools, not facts about the machine or its behavior. We use the metaphor because it helps us model complexity and communicate with others. This says nothing about how useful OOP is relative to the other tools at our disposal as programmers, only that it is a tool of cognition in use today. This mirrors the patterns used in the oral arts that allow the artists to memorize and communicate for more nuanced and voluminous information to their audience.

In his famous paper Can programming be liberated from the von Neumann style? (Backus78), John Backus questions the popular contemporary cognitive technologies used for describing programs, which he ironically had a hand in forming. Backus argues that the prevailing mental models are intellectually limiting and that a more functional model would be more effective:

Conventional programming languages are growing ever more enormous, but not stronger. Inherent defects at the most basic level cause them to be both fat and weak: their primitive word-at-a-time style of programming inherited from their common ancestor – the von Neumann computer, … their division of programming into a world of expressions and a world of statements, their inability to effectively use powerful combining forms for building new programs from existing ones, and their lack of useful mathematical properties for reasoning about programs.

He describes two concepts as particularly limiting:

- Storing data to particular locations, and

- sharp distinctions between statements (which have effects and do not have results), and expressions (which have conceptual results but typically not effects).

He argues that these noetic structures hold us back from effective construction of programs. They are related to the behavior of machines, but are not inherent to programming languages, so as people working on programming languages, we should not be bound by them.

Surely there must be a less primitive way of making big changes in the store than by pushing vast numbers of words back and forth through the von Neumann bottleneck. Not only is the tube a literal bottleneck for the data traffic of a problem, but, more importantly, it is an intellectual bottleneck that has kept us tied to word-at-a-time thinking instead of encouraging us to think in terms of the larger conceptual units of the task at hand.

Note that the technology Backus refers to is not really about programming languages as they are transcribed, but the noetic structures they presume. Just as oral people cannot effectively and hierarchically organize knowledge without writing, neither can programmers constrained by a von Neumann model of programming effectively organize programs. Backus essentially argues that different cognitive technologies (like functional programming and expression-oriented semantics) should be made available to programmers, and these technologies should shape our cognition.

A language that doesn’t affect the way you think about programming, is not worth knowing.

The Social Nature of Code

To speak, you have to address another or others. People in their right minds do not stray through the woods just talking at random to nobody. Even to talk to yourself you have to pretend that you are two people… I avoid sending quite the same message to and adult and to a small child. To speak, I have to be somehow already in communication with the mind I am to address before I start speaking… I have to sense something in the other’s midn to which my own utterance can relate. Human communication is never one-way.

Although it’s tempting to consider programming at least as individual an activity as reading and writing, I argue it is far more social. Some programs are written and used in isolation, but the vast majority of useful programs are written by one person or group and used by many others. For programs and libraries to be successful, they need people to use them and technical contexts to facilitate them.

Most programs exist in a social and technical context. People must be able to communicate with each other about them. Physical infrastructure must be provided to facilitate their use. In this sense, programs resemble the town-square. I’m reminded of The Cathedral and the Bazaar: Musings on Linux and Open Source (Raymond99). In contrast, literature can be constructed entirely in isolation (and consumed in a similar manner), as was the case with 19th century philosophers like Kierkegaard.

Because speech is inherently temporary and active, it is also inherently context-bound. Prior to technologies like radio and TV, speech could only be delivered and consumed in-person, in a specific context, with other people. This inherently contextualizes speech, far more than literature or programs, though I argue here that programs are more context-bound than literature.

A Hybrid of Artifact and Action

Code has remnants of both oral art and literacy: it is an artifact of thought with a physical representation, but it inherently describes an action, almost like a spell, and always embedded in a technical context in which the code functions.

Like orality, it requires context and (usually) community to be meaningful. It exists as performance/action, not just description. Programmers use mythology and metaphor to structure their thoughts.

Like literacy, it is written, stored, and transmitted as text. It can be organized hierarchically and precisely, and it can lend itself to isolation. These points are discussed in the following post.

I believe this has something to do with the difficulties some people have with learning to program. New engineers must build mental models ex nihilo– there are not always metaphors that map the machine’s behavior to the physical world. Appropriate mental models must be built up through time and exposure. This hybrid nature suggests programming is a genuinely new form human cognition.

The next post explores the literary characteristics of programs and how they extend beyond traditional reading and writing.

Literacy and Code

Orality and code have a surprising number of characteristics in common. However, the more natural comparison is, of course, literature.

Literature leaves a physical trace in the world, while orality does not. Programming languages are unique because they do leave a trace on the world (the source code of the program is stored somewhere), but the physical record is only part of the program, unlike literature. Programs are inherently actions, like the oral arts, yet they are cast in text. Literature simply describes or prescribes; code both describes and performs, closer to a magic spell than a textbook. Code is impotent and incomplete without being executed.

Syntax and Cognition

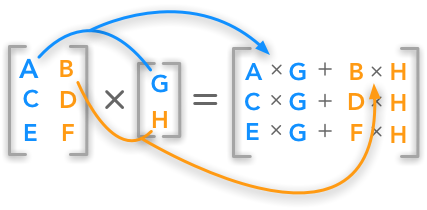

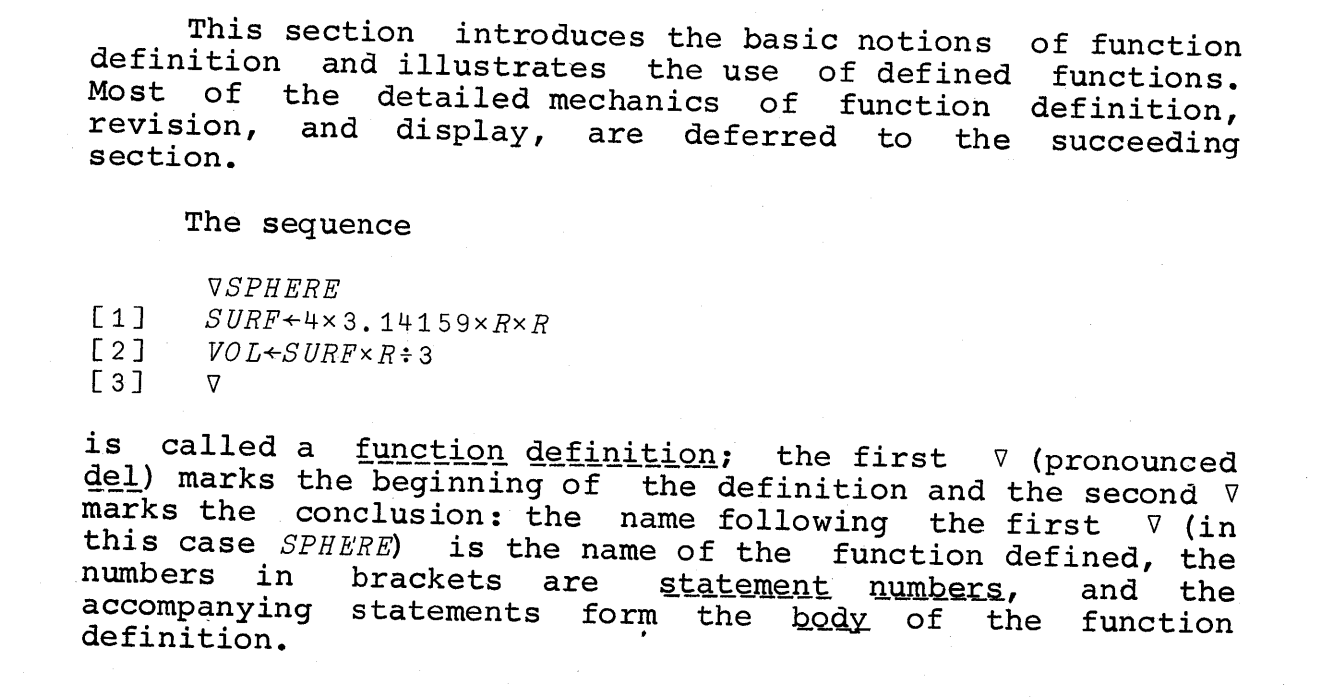

Some aspects of programming language design are purely literate concepts, such as syntax. In Notation as a Tool of Thought (Iverson79), Iverson considers how syntax shapes cognition. He begins with his philosophical foundations:

By relieving the brain of all unnecessary work, a good notation sets it free to concentrate on more advanced problems, and in effect increases the mental power of the race.

That Language is an instrument of human reason, and not merely a medium for the expression of thought, is a truth generally admitted.

He points out some of the advantages programming languages have over regular literature:

Nevertheless, mathematical notation has serious deficiencies. In particular, it lacks universality, and must be interpreted differently according to the topic, according to the author, and even according to the immediate context. Programming languages, because they were designed for the purpose of directing computers, offer important advantages as tools of thought. Not only are they universal (general-purpose), but they are also executable and unambiguous. Executability makes it possible to use computers to perform extensive experiments on ideas expressed in a programming language, and the lack of ambiguity makes possible precise thought experiments. In other respects, however, most programming languages are decidedly inferior to mathematical notation and are little used as tools of thought in ways that would be considered significant by, say, an applied mathematician.

Programming languages are executable, deterministic, and unambiguous. These characteristics offer cognitive tools that literature does not.

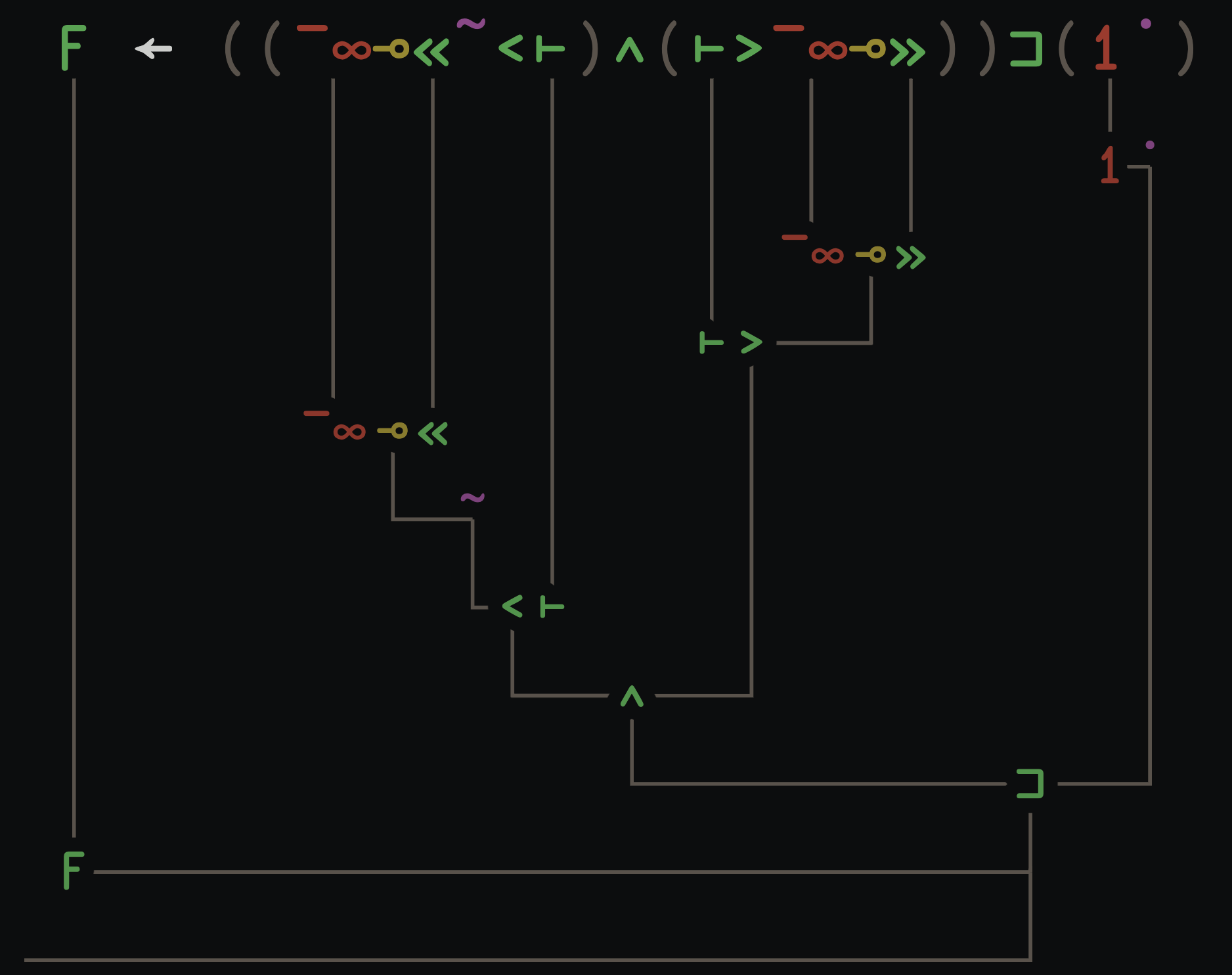

APL, Glyphs, Ideographs

Iverson’s thesis is that the most useful concepts of mathematical notation and programming languages come together in his programming language APL. Those unfamiliar with APL’s syntax may consider it a cognitive inhibitor rather than an aid, yet the language’s terse syntax does provide an interesting study of the capability of a language’s syntax to affect the thought process of its users.

APL’s dictionary is comprised of glyphs glyphs that provide visual hints to their function instead of English words.

⌈0.5

1

⌊0.5

0

The glyph ⌊ visually suggests taking its operand from midpoint down to the floor, reminding the programmer of its function (“round down”). With two operands, these glyphs compute minimum and maximum, evoking similar imagery:

1⌊2

1

1⌈2

2

The transpose operator ⍉ suggests spinning the matrix around its diagonal. One imagines themselves holding both ends of the diagonal line and spinning the circular part of the glyph around it, swapping the rows and columns:

M ← 3 3 ⍴ ⍳9

M

1 2 3

4 5 6

7 8 9

⍉M

1 4 7

2 5 8

3 6 9

In this way, APL provides a dictionary of flexible hieroglyphs that give the programmer visual hints as to their function. APL’s glyphs function like ideographs like “1” and “2”: a Chinese speaker and an English speaker will not understand each other’s explanations of transposition, but both visually understand the meaning of “⍉”. The characters communicate meaning independent of spoken language. Gesture serves a similar function in oral peoples; neighboring communities may not be mutually comprehensible, but they can point and motion with their hands to communicate.

Proponents of APL (myself included) can only hope for adoption to branch out as presently popular forms of writing have:

When a fully formed script of any sort, aphabetic or other, first makes its way from outside into a particular society, it does so necessarily at first in restricted sectors and with varying effects and implications. Writing is often regarded at first as an instrument of secret and magic power.

If the declining popularity of Chinese pictographic scripts is any indicator, a simple alphabet is an aid to adoption and modern offshoots of APL are not well-positioned to achieve widespread adoption.

Semantic Editing

Modern programming environments offer editing tools with no comparison in a typical written setting, thanks in particular to the determinability of code. Formally defined grammars allow procedural analysis of code that is not possible in prose. The earliest examples of this are seen in the Lisp community, where the editing environment was an early focus.

As the experience of working with text as text matures, the maker of the text, now properly an ‘author’, acquires a feeling for expression and organization notably different from that of the oral performer before a live audience… The writer finds his written words accessible for reconsideration, revision, and other manipulation until they are finally released to do their work. Under the author’s eyes the text lays out the beginning, the middle and the end, so that the writer is encouraged to think of his work as a selfcontained, discrete unit, defined by closure.

Just as the transition from orality to literacy offered new tools for construction of thought, so too do modern editors. In particular, language servers make the entire semantic context of a program available to the programmer directly, freely searchable and modifiable. In prose, the best we have is tools to look up the definitions of words, but there is nothing like an English language server that would allow an author to navigate text as a semantic tree. Authors can construct the semantic information themselves by organizing their work into chapters or files, but the burden is on the author to maintain the structure. Editors understand the full technical context and update continuously.

Print, as has been seen, mechanically as well as psychologically locked words into space and thereby established a firmer sense of closure than writing could.

As print “locked words into space,” editors bring programs to life.

Literature in Programming

Two subsets of programming are unique in their relation to literacy. Comments and literate programming are both inherently works of literature embedded in or combined with code.

Comments exist in an interesting overlap of literacy and code. They are (usually) strictly literary devices embedded in code, with no effect on the resultant program. In this case, code completely subsumes the category of literature, since any work of literature can theoretically exist inside a program. Yet, they are not typically meaningful outside the technical context they physically reside in.

Donald Knuth studied the boundary between code and literature in Literate Programming:

I believe that the time is ripe for significantly better documentation of programs, and that we can best achieve this by considering programs to be works of literature.

Let us change our traditional attitude to the construction of programs: Instead of imagining that our main task is to instruct a computer what to do, let us concentrate rather on explaining to human beings what we want a computer to do.

For a more literature-forward analysis of programs, Literate Programming is a must-read. Knuth dealt with the specifics of typesetting documents in great detail across several volumes, such as his 1999 work, Digital Typography.

Digital typography was even the titular motivation for Dennis Ritchie to begin work on the Unix operating system at Bell Labs; although he probably just wanted an excuse to work on his own OS after the failure of the Minix operating system, developing a proper digital typesetting program for the Lab was at least the rationale for funding his effort.

Programs are inherently social and embedded in a social and technical context. If the program is to be of use to anyone other than the author, some portion of the technical context required to run the program must be communicated to other people. If the program is to be extended for any purpose other than the original one, the logic the program follows must be interpretable to someone else. All but the simplest programs require communication between people to be useful.

Effects on Cognition

Ong avoids moralizing the distinction between oral and literate people, avoiding even implicitly moral terms like “illiterate”. Nonetheless, he does not shy away from the benefits of literacy:

And elsewhere:

Orality is not an ideal, and never was. To approach it positively is not to advocate it as a permanent state for any culture. Literacy opens possibilities to the word and to human existence unimaginable without writing. Oral cultures today value their oral traditions and agonize over the loss of these traditions, but I have never encountered or heard of an oral culture that does not want to achieve literacy as soon as possible. Yet orality is not despicable. It can produce creations beyond thereach of literates, for example, the Odyssey. Nor is orality ever completely eradicable: reading a text oralizes it. Both orality and the growth of literacy out of orality are necessary for the evolution of consciousness.

If literacy changed cognition by enabling abstract thought, hierarchical organization, and precise specification, what might code do?

Rather than manipulating text alone, the programmer works on semantic structure with immediate feedback. Thought experiments are executable directly and provide instant feedback. Programmers learn to build on top of abstractions and communicate with other people in new ways.

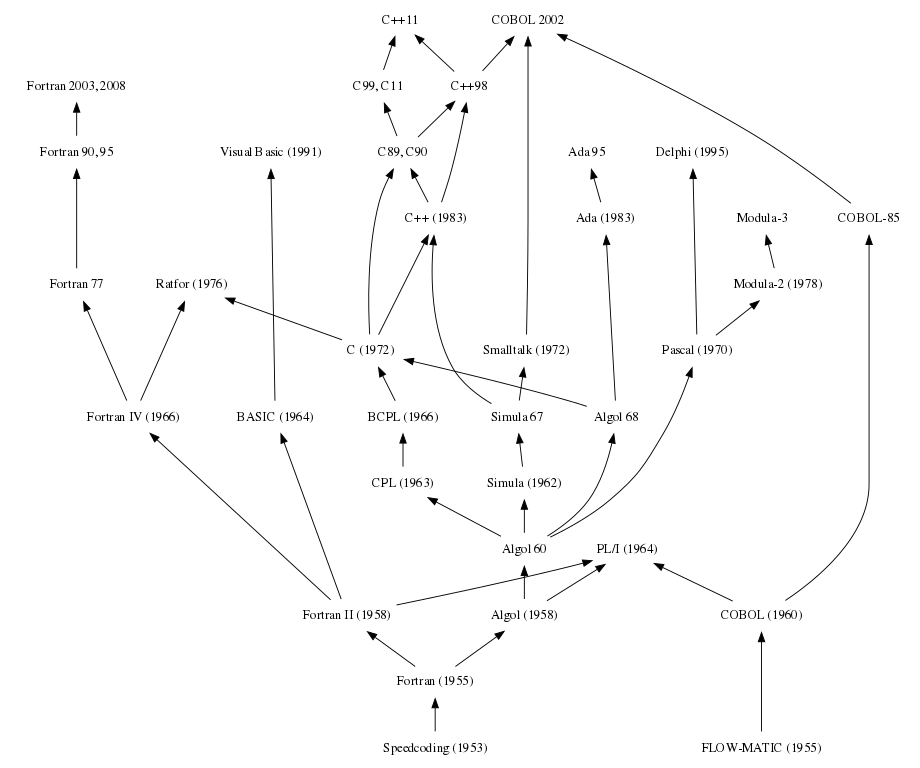

Compare the history of programming languages to the history of literacy. Literacy is at the very least several thousand years old while programming languages are about one hundred years old, at most. The difference between the Automatic Programming of John Backus and Grace Hopper and modern programming languages is astounding. What differences might we see in the next thousand years? What effects might those differences have on our cognition?

Conclusion

Programming languages exhibit characteristics of both orality and literacy.

- Like oral performance, code is inherently contextualized, formulaic, and active.

- Like literature, it is written, textual, revised, and precise.

They could only have been designed by a literate mind, yet they are not fully subsumed by literature. They are text that acts on the world directly, not by virtue of influencing people.

People who write code employ oral and literary cognitive technologies in addition to entirely new ones that have not been academically analyzed as far as I can tell. Reading Ong alongside Backus, Knuth and Iverson provides anthropological, social, technical, historical and philosophical context for how programming languages came to be, and how we might design new ones.

Debates about programming languages and paradigms are not solely technical disagreements, but reflections of different ways of organizing thought and extending cognition, analogous to the shift from orality to literacy itself. Programming language design is about communication and human cognition. When we design a new language or choose between paradigms, we are not arbitrarily choosing how to give instructions to computers; we are building a cognitive toolbox that shapes how programmers think and how communities coordinate.

Links

- Backus78: Can Programming Be Liberated from the von Neumann Style?

- Boole54: Laws of Thought

- Ong82: Orality and Literacy

- Fettes23: Book Review: Orality and Literacy: The Technologizing of the Word

- Iverson79: Notation as a Tool of Thought

- Knuth84: Literate Programming

- Perlis: EPIGRAMS IN PROGRAMMING

- Raymond99: The Cathedral and the Bazaar

- Sturgill12: Review: Orality and Literacy by Walter J. Ong

- Tao08: Use good notation

- Whitehead11: An Introduction to Mathematics

Notes on Orality and Literacy

Loose collection of personal notes from reading Ong’s Orality and Literacy. See the prior post for my collected thoughts.

mnemonics and formulas

- Does code remind me more or orality or literacy? Simply reading the technical text does not contain the full context required to interpret it, more similar to orality. The organizational and technical context are immediate and often required for interpretation.

- Orality is inherently active, non visual, and contextualized. It only ever exists as an event in a moment in a context, while literature is an artifact of thought, independent of a context. Code is a hybrid of both: an artifact of thought, but inherently describing an action, almost like a spell, and always embedded in a technical context in which the code functions.

- Sound only exists as it is going out of existence. There is no stopping or having sound. It is inherently perishing. Thoughts are conceived of in primarily oral cultures as such.

- However complex and rigorous, primarily oral thoughts cannot be independent and purely logical, because as soon as the thought is had, it ceases to exist, absent some mechanism for committing the thought to memory and transferring it to others.

- Code is different from plain ’ol literature describing or prescribing action; it is action, (via a translator). It is not a call-to-action, it is action in some fundamental way.

Characteristics of Orally Based Thought

- Additive rather than subordinative

- Orality favors the cliche because it’s a memory aid and ensures the message will travel further.

- there’s lots of thematic repetition, and they must stay intact. Their persistence is evidence of the effectiveness of the cliche in allowing the moral to travel through time and space. It’s not low-minded to use them, it’s part of the medium. Analysis/deconstruction is risky-possible destroying the message forever if the analysis propagates through too many minds such that the message ceases to travel organically. The same can be said for “redundant” or “copious” continuity.

- it’s like the page numbers or paragraphs. it’s not redundant to leave that extra space there, because it’s really useful in helping the reader keep track of what’s going on. Future iterations of humanity might look back at paragraph line breaks and other literary tools of thought and organization and consider them to be “silly” or “primitive” because they are no longer useful in whatever medium comes after writing.

- sparse linearity is actually unnatural and recent, only able to persist in human communication in the presence of longstanding artifacts of thought.

- repetition is particularly useful in live communication because of the nature of sound. It’s easy to miss a word here and there,

so repeating the message often helps the audience keep track and refine their understanding. It’s not primitive at all.

- also gives the speaker a chance to mindlessly repeat their message while they consider what to say next.

- characterized by conservatism/traditionalism

- bc what is not actively conserved is immediately lost.

- writing can also be conservative (laws were frozen in time as soon as they were written down)

4: Writing Restructures Consciousness

- writing establishes “context free” language, which is an oxymoron

- no way to properly refute text. it always says the same thing as before.

- Most persons are surprised, and many distressed, to learn that essentially the same objections commonly urged today against computers were urged by Plato in the Phaedrus and in the Seventh Letter against writing. Writing, Plato has Socrates say in the Phaedrus, is inhuman, pretending to establish outside the mind what in reality can be only in the mind. It is a thing, a manufactured product… Secondly, Plato’s Socrates urges, writing destroys memory. Those who use writing will become forgetful, relying on an external resource for what they lack in internal resources. Writing weakens the mind. Today, parents and others fear that pocket calculators provide an external resource for what ought to be the internal resource of memorized multiplication tables… Thirdly, a written text is basically unresponsive… Fourthly, in keeping with the agonistic mentality of oral cultures, Plato’s Socrates also holds it against writing that the written word cannot defend itself as the natural spoken word can: real speech and thought always exist essentially in a context of give-and-take between real persons.

- Those who are disturbed by Plato’s misgivings about writing will be even more disturbed to find that print created similar misgivings when it was first introduced.

- One weakness in Plato’s position was that, to make his objections effective, he put them into writin, just as one weakness in anti-print positions is that their proponents, to make their objections more effective, put the positions into print. The same weakness in anti-computer positions is that, to make them effective, their proponents articulate them in articles or books printed from tapes composed on computer terminals. Writing and print and the computer are all ways of technologizing the word. Once the word is technologized, there is no effective way to criticize what technology has done with it without the aid of the highest technology available.

Plato was thinking of writing as an external, alien technology, as many people today think of the computer. Because we have by today so deeply interiorized writing, made it so much a part of ourselves, as Plato’s age had not yet made it fully a part of itself, we find it difficult to consider writing to be a technology as we commonly assume printing and the computer to be.

- Writing is fundamentally unnatural, while oral speech is emergent.

Like other artificial creations and indeed more than any other, it is utterly invaluable and indeed essential for the realization of fuller, interior, human potentials. Technologies are not mere exterior aids but also interior transformations of consciousness, and never more than when they affect the word.

- Technologies like the orchestra are transformative and unnatural and take us to new heights.

- writing is inherently individual. take a teacher and a classroom and put them in conversation and the whole group participates. Have them all read and they descend into their own universes, entirely alone.

- We are so, so early in the history of programming. Consider how primitive the literature was in the advent of the written word and the alphabet. Consider how difficult it would be for Plato to consider the applications of literature we have today, and how deeply literacy permeates our collective cognition. So too are we in the very beginning of what may mature into a new offshoot of literature.

To make yourself clear without gesture, without facial expression, without intonation, without a real hearer, you have to foresee circumspectly all possible meanings a statement may have for any possible reader in any possible situation, and you ahve to make your language work so as to come clear all by itself, with no existential context. The need for this exquisite circumspection makes writing the agonizing work it commonly is.

-

Learned Latin reminds me of Algol. It’s divorced from reality, with hardly ever a full compiler or programming environment. It was purely learned. It was pronounced differently all over, but always written the same. For over a thousand years, educated peoples did all of their scientific and mathematical and abstract thinking in Latin, but hardly spoke it, if ever. Perhaps due to Algol’s academic origins and planned nature. It did not grow out of a technical setting, but was designed by committee.

-

Literate programming: mention Knuth’s Typesetting books, Jupyter notebooks, doctest, and Org mode

Mmoreover, as earlier noted, composition on computer terminals is replacing older forms of typographic composition, so that soon virtually all printing will be done in one way or another with the aid of electronic equipment. And of course information of all sorts electronically gathered and/or processed makes its way into print to swell the typographic output.

At the same time, with telephone, radio, television and various kinds of sound tape, electronic technology has brought us into the age of ‘secondary orality’. This new orality has striking resemblances to the old in its participatory mystique, its fostering of a communal sense, its concentration on the present moment, and even its use of formulas (Ong 1971, pp. 284–303; 1977, pp. 16–49, 305–41). But it is essentially a more deliberate and selfconscious orality, based permanently on the use of writing and print, which are essential for the manufacture and operation of the equipment and for its use as well.

- Notion that the “center of mass” of communication is what determines cognition; someone may be somewhat shaped by literacy but is primarily oral.

- Today’s orality is distinctly less agonistic than earlier orality. Comparisons between today’s presidential debates and 19th c debates that were in public with not amplification equipment. Trend sorta broken by trump.

6: Oral Memory, story line

Similar to what we need to do in literature, in code in order to make sense of large systems. One might ask the same of us: why use this mythology of objects and such, when we can express our ideas in more straightforward (atoms of meaning)?

Although it is found in all cultures, narrative is in certain ways more widely functional in primary oral cultures than in others. First, in a primary oral culture, as Havelock pointed out (1978a; cf. 1963), knowledge cannot be managed in elaborate, more or less scientifically abstract categories. Oral cultures cannot generate such categories, and so they use stories of human action to store, organize, and communicate much of what they know.

Second, narrative is particularly important in primary oral cultures because it can bond a great deal of lore in relatively substantial, lengthy forms that are reasonably durable—which in an oral culture means forms subject to repetition. Maxims, riddles, proverbs, and the like are of course also durable, but they are usually brief. Ritual formulas, which may be lengthy, have most often specialized content.

Thus an oration might be as substantial and lengthy as a major narrative, or a part of a narrative that would be delivered at one sitting, but an oration is not durable: it is not normally repeated. It addresses itself to a particular situation and, in the total absence of writing, disappears from the human scene for good with the situation itself.

In a writing or print culture, the text physically bonds whatever it contains and makes it possible to retrieve any kind of organization of thought as a whole. In primary oral cultures, where there is no text, the narrative serves to bond thought more massively and permanently than other genres.

And yet, with the most sophisticated mechanisms of organizing information, we still rely on metaphor and picture.

In another sense, we are so far beyond attempting to come up with narratives and metaphors, that it is nearly impossible for new CS grads to figure out what’s going on. All of us in industry have built up mental concepts ex nihilo and rely on them day to day. Other engineers understand us but it’s so difficult to build up from nothing without massive amount of exposure since there are not natural-world or oral/narrative corollaries to build upon. Maybe this is what will continue to expand in the future of literacy as we further co-develop with computers and programming languages.

Is it so difficult to keep large systems present in our heads that we too must construct narratives?

What made a good epic poet was, among other things of course, first, tacit acceptance of the fact that episodic structure was the only way and the totally natural way of imagining and handling lengthy narrative, and, second, possession of supreme skill in managing flashbacks and other episodic techniques… If we take the climactic linear plot as the paradigm of plot, the epic has no plot. Strict plot for lengthy narrative comes with writing.

As the experience of working with text as text matures, the maker of the text, now properly an ‘author’, acquires a feeling for expression and organization notably different from that of the oral performer before a live audience. The ‘author’ can read the stories of others in solitude, can work from notes, can even outline a story in advance of writing it. Though inspiration continues to derive from unconscious sources, the writer can subject the unconscious inspiration to far greater conscious control than the oral narrator. The writer finds his written words accessible for reconsideration, revision, and other manipulation until they are finally released to do their work. Under the author’s eyes the text lays out the beginning, the middle and the end, so that the writer is encouraged to think of his work as a selfcontained, discrete unit, defined by closure.

This is extended even further in code. Consider the applications of

editor integrations. In a properly configured text editor,

a variety of unique tools are available to developers.

Words and sections of text appear in different colors; some may be

typeset as italic or bold.

Should the developer change the text such that the semantic meaning

of the aforementioned text changes, the typesetting may too change,

reflecting the new role the text plays in the context of the program.

Developers are able to jump directly from a set of characters to their definitions,

or simply hover their mouse over some text to learn more about it or see

any potential errors resulting from the text.

Consider how remarkable of an extension

to literacy this is; can a reader “jump” from some point in a

narrative text to the definition of its concept? We can look up definitions

of words, or search the internet, but in many editors, the full semantic

context of a set of characters is available to the author.

There is no tool to analyze and navigate the regular text as a semantic tree of

information.

The language-server (for example, clangd for C or C++)

makes the semantic structure and full context

of my program available to the developer in an instant,

updating as soon as changes are made.

I can think of no corollary in regular literature, because the

semantics are not similarly determinable.

Print, as has been seen, mechanically as well as psychologically locked words into space and thereby established a firmer sense of closure than writing could.

Just as print froze words in time space, code brings them to life. They are inherently actions.

- Detective novels (and the like) could only have come into play with literacy bc they require too much precision for orality.

Insofar as modern psychology and the ‘round’ character of fiction represent to present-day consciousness what human existence is like, the feeling for human existence has been processed through writing and print. This is by no means to fault the present-day feeling for human existence. Quite the contrary. The present-day phenomenological sense of existence is richer in its conscious and articulate reflection than anything that preceded it. But it is salutary to recognize that this sense depends on the technologies of writing and print, deeply interiorized, made a part of our own psychic resources. The tremendous store of historical, psychological and other knowledge which can go into sophisticated narrative and characterization today could be accumulated only through the use of writing and print (and now electronics). But these technologies of the word do not merely store what we know. They style what we know in ways which made it quite inaccessible and indeed unthinkable in an oral culture.

7: Some Theorems

- Ong is so forthright about all the subtopics of this book that should be investigated for further study.

Over the centuries, the shift from orality through writing and print to electronic processing of the word has profoundly affected and, indeed, basically determined the evolution of verbal art genres, and of course simultaneously the successive modes of characterization and of plot

Man, how much more will the LSP and other editor integrations change the way we think? What about AI editor integration’s effects on cognition? Not only do we have recluse, individualistic reading and writing, but we have access to another mind, reading and writing alongside us, seeing everything we see.

Marshall McLuhan "The medium is the message."

Paradoxically, Plato could formulate his phonocentrism, his preference for orality over writing, clearly and effectively only because he could write.

To speak, you have to address another or others. People in their right minds do not stray through the woods just talking at random to nobody. Even to talk to yourself you have to pretend that you are two people… I avoid sending quite the same message to and adult and to a small child. To speak, I have to be somehow already in communication with the mind I am to address before I start speaking… I have to sense something in the other’s midn to which my own utterance can relate. Human communication is never one-way.

- Some notes on why the “media model” is not the right way to think about communication. we aren’t sending nuggets of information down a tube to be decoded by the other person. We can do something to someone else and we are shaped by the media as well.

History of Compilers

10/23/2025

I feel that the history of compilers has not yet been as a cohesive story, but only as a subplot of other histories. The links between different threads of compiler history are interesting and they provide a unique perspective on computing that hasn’t been satisfactorily explored yet, in my opinion. This PDF is a collection of my personal research on the book that I wish existed–one on the history of compilers in particular.

Link to the PDF

David MacQueen on the History of Compilers

11/4/2025

In this interview, David MacQueen and I discuss the history of compilers, especially as it relates to the evolution of Standard ML, but also discussing changes he would like to make to the language, the influence of Peter Landin and the λ-calculus, and modern compiler technologies.

I really enjoyed this conversation and I’m thankful to David for taking the time to chat. See this post for the motivation for this interview.

This is transcript was originally AI-generated, but I’m editing it by hand as I review pieces of it. Apologies for any errors.

with uh these two voice all right well i'm recording on my side too and you're

you got yours so i think we can just uh hop back in i'm thinking that uh maybe

we'll do a little five to ten minute intro on some of your background how you

got into working on ml and then you know maybe a 30 35 minute maybe a 45 minute

total conversation and the most of it we'll just be chatting about the history

of compilers maybe we'll talk a bit about you know the logic for computable

functions and how that led to ml as this i can give you a bit of ml background

sure yeah yeah so yeah so my name is dave mcqueen i was trained as a

mathematical logician in computability theory at mit i was in the air force for

a while then i went to edinburgh as a postdoc in 1975 and spent almost five

years in edinburgh and that's where i turned into a computer scientist and i

worked with people like rob burstle who was my boss and rob miller and

basically the theory of programming languages uh my background in mathematic

mathematical logic was uh relevant and useful anyway so um edinburgh was a kind

of special place in the 70s in the 70s and since then in terms of european uh

theoretical computer science which is quite different from american theoretical

computer science in the sense instead of focusing on algorithms and complexity

theory in the u.s uh european theoretical computer science tended to uh look at

um connections between logic and and computer science and in particular uh uh

the theoretical foundations of programming languages and um so the lambda

calculus was a major inspiration source of ideas right back church around 1930

and later ideas uh about types uh that were introduced by alonzo church and uh

well introduced originally by uh russell and whitehead around 1911 uh as a

defense against uh um paradoxes like russell's paradox and uh then uh turned

into a kind of augmented version of the lambda calculus called the simply type

lambda calculus by church in a paper in 1940 uh and uh and uh then kind of

rediscovered and popularized by some computer scientists back in the uh 60s

mostly uh christopher strachey uh and uh peter landon um both of whom had kind

of uh non-standard informal kinds of education because there wasn't uh computer

science discipline in the 1950s uh there were things going on at cambridge

manchester uh around turing and and so on but uh and early at bletchley park

earlier in bletchley on the code breaking uh technology mm-hmm so that was the

kind of british context and there were people like tony hoar that were involved

in this uh program language you know semantics and right uh really work on

verification and applying logic to programming yeah and if if i can interject

so you know around this time i'm i feel like today it's a little bit hard to

overstate the influence of the development of algol had on all of these

languages and there were you know called by name and called by value in these

things and then a lot of landon and strachey's papers were a little more strict

evaluation semantics is that right maybe you can speak to the development of

algol and there was the algol group um there were uh i don't recall whether

strachey or landon had direct connection one moment uh yeah no worries yeah

he's getting a call from my wife i'm just hello i'm uh i'm in the midst of a

remote discussion okay about compilers very quickly uh i'm at pedro's with sin

we finished our shopping and we're eating lunch okay no problem right bye she's

having lunch with her friends good to know good to glad we got that settled uh

anyway um so i'm kind of vague about uh you know obviously um uh john bacchus

uh peter norr from denmark um um uh the algol 68 guy uh so there was a group uh

sure involved in in the algol development uh that was late 50s through early

1960s and then there were some uh interesting there was probably the first book

about compilers was the description of the algol 60 compiler mm-hmm but uh and

strachey's work around the mid 1960s was part of it involved uh definition of a

new kind of systems programming language she called cpl right right epl and

that was an algol um 60 derived language uh and um uh it had i don't think

peter landon was involved directly in cpl yeah i think he was not on the papers

but i think around the same time he was uh busy translating he had a company it

was a kind of software consulting company gotcha in london and he hired um uh

peter landon and peter landon uh got very enthusiastic about the lambda

calculus and wrote a burst of very important papers from about 64 to 66 uh one

was uh the next 700 programming languages where he described a kind of uh

syntactically sugar sugared lambda calculus that he called i swim if you and um

then he wrote about uh a abstract machine for evaluating uh that language or

the lambda calculus right uh and he also wrote a couple of papers about how to

model the semantics of algol 60 by translating algol 60 into the lambda

calculus with various right right devices and tricks and that machine was the

was it landon's secd machine yeah the secd machine that's right right it had

four components the store the stack the environment the control and the dump

which was more stack right yeah anyway um so those papers uh had a huge impact

and uh later on uh robin milner was interested in writing a uh uh proof

assistant this would be an interactive system to help uh a person uh perform

formal proofs in a in a particular logic which was called lcf or logic for

computable functions and this is at stanford right he did that work initially

at stanford and then came brought it back to edinburgh right right yeah around

1973-74 and logic for computable functions was a logic for reasoning about

functions and recursion and so on developed by um dana scott in the late um

late 60s mid to late 60s and he developed that um because he was trying to find

a mathematical model for the lambda calculus uh and he got frustrated he

couldn't find a model because of the uh fundamental problem of self-application

that the model of the lambda calculus had their support the idea of buying a

function to itself and for a long time scott couldn't figure out how to build

such a model and he decided to fall back on on a logic for reasoning about uh

these kinds of functions but then he did discover a model around 1969 which is

the scott domain model infinity model uh and uh so he never published that the

earlier paper that described the lcf logic uh kooch um i swim and all that was

the name title of the logic it it's since been published in uh logic and

symbolic computation gotcha right uh as uh sort of uh retrospective uh

publication and so now ml enters the picture because this lcf system yeah yeah

uh robin milner had a a robin milner was another sort of self-taught british

computer scientist he um had a good education uh good mathematical background

and he was teaching at a public school you know private secondary school in

britain and um he got interested in doing research and he um i think he had a

position at swansea and he managed to get a kind of a research visit for a year

or two at stanford around 1971 72 and at stanford he uh met with a guy named uh

uh wyrock uh wyrock uh wyrock and uh they together uh thought they could write

a theorem prover that would use this logic and so they the first first

generation of that was uh it was called ml no it was called uh lcf theorem

prover uh what didn't exist at that time that was at stanford they wrote the

system in lisp and lisp and lisp was the meta language right right pro language

if you like in the language for writing uh proof tactics but um he then got a

position where miller then got a position to edinburgh and came to edinburgh

sometime in late 73 mid i think and um brought in a couple postdocs over the

next years got some funding and uh decided to do a proper lcf theorem prover

and instead of using lisp which was uh insecure in the sense that it was not

statically typed um he chose to use i swim as the meta language but added a

type system and a static type checker and beyond that he um kind of

rediscovered um the idea of inferring types and this was a a fairly old idea in

the logic world but it wasn't known in computer science so no they essentially

rediscovered it and the um ml metal language for the lcf theorem prover and

that was in the mid to late 70s they kind of finished this theorem prover

around 1978 and they published a uh a paper um on the theorem prover and then

miller published a paper on the type system and so the the purpose of you know

they had lisp and ice women landed his original papers dynamically typed right

but the the issue was was a kind of thought experiment language uh although i

swim uh after he left um working for strachey he went to new york and he worked

at univac for a while but he also had a visiting position for a while at mit

and so he taught people at mit about ice swim and there was actually an

implementation effort led by evans and wolzencraft at mit in the late 60s and

they called their implementation pal i forget what pal stands for but it was

basically ice swim and so uh but i don't think uh the pal uh work had any

visibility or influence in edinburgh um but the reason that lisp didn't work

very well or even dynamic ice swim was because if you you know made some

mistake in your list code you could get a a proof that was wrong right right so

the point uh static type checking was that there would be an abstract type of

theorem and the only way you could produce a value of type theorem um is to uh

use inference rules that were strongly typed and uh the strong typing uh

guaranteed in principle the correctness of the proof so you would be able to

generate any unprovable or non-proved theorems other than by uh application of

the proof rules the inference rule uh and so it was logical security that the

type system was providing but in order to have some language that was um closer

to lisp in in flexibility uh they'll never have the idea of introducing this uh

automatic uh type inference system and as i said uh earliest uh work on

something like that was uh 1934 by by um curry uh and then later on curry in

the 50s when he was writing his book about uh um about uh combinator logic he

had a chapter in that book about uh what he called functionalities for for

conduct combinators which were essentially his term for types uh uh in the 1934

paper he kind of then formally um described um described something that you

would call type inference and then came back to that uh later on in in the 60s

and then roger hindley a logician at uh swansea uh took that up and wrote a

paper about it as well and this was before um milner knew about it so later on

i mean had published his paper about it somebody had told him that there was

this earlier work by curry and hindley but in fact there was the the first

actually um published algorithm for type inference was uh produced by max

newman in 1943 and max newman was turing's uh mentor at cambridge with

professor of mathematics and uh uh uh was interested in things like goodless

proof and foundational issues and uh he was the one who sent turing off to

princeton to work with perch and get a phd and um later on he became the head

of the manchester uh computing laboratory and hired turing there toward the end

of his life anyway uh so max newman in a different context had thought about

type inference and wrote a paper about it and he was his main target was type

inference for coin's new foundation which was a kind of uh alternate

formalization for sort of logic but he also had a section that did uh applied

the ideas to lambda calculus anyway so that uh early work by newman was

interesting but uh nobody knew about it and henley rediscovered it back many

years later um anyway so this is a case where there was parallel work in logic

and computer scientists but they weren't aware of each other well and at this

point the lambda calculus and combinatory logic are sort of cousins right

solving a lot of the same problems right they're equivalent right combinatorial

logic is essentially the lambda calculus without variables and you have a bunch

of uh combinator reproduction rules instead of the beta reduction that would

calculate right right so um move my phone a little bit closer to make sure

anyway um so that was the kind of background cultural um situation in edinburgh

in the late 70s and i've arrived at 1975 um but i was working at that time

there was a computer science department and rob milner and his group of

postdocs and grad students were in the computer science department and they

were at the king's buildings campus which was a kind of science campus three

miles south of the rest of the university which was in the center of town uh

and um there was a school of artificial intelligence uh so the early blossoming

of artificial intelligence around edinburgh and i worked for ron burstle who

was in the school of artificial intelligence and uh some of some of his other

postdocs and students and uh um um we developed a kind of parallel language

called hope which was a um language derived from or evolved from a rather

simple first order uh functional language that kind of resembled girdle's uh

generally recursive equations of uh 1930 uh which were part of the uh leading

to the incompleteness still anyway um we developed this language called hope

and we borrowed some ideas from ml in particular the general type system and

the type inference and type checking uh approach and uh it had its own um

special thing which was later called algebraic data types and uh so algebraic

data types came came from hope um based on part of the evolution from this

first order language and then um in the 80s uh there was a another effort to

design uh a kind of first class uh functional programming language which uh

robin initially called standard ml because it was trying to standardize and

unify several um threads of work including the ml that was part of the lcf's

theorem prover and the hope language that ron bristol and i had developed and

um cambridge was off the people at cambridge and inria were off uh kind of

evolving lcf and in in in the ml dialect there so uh so robin proposed this

sort of unified standard ml that had uh aspects of hope and uh and lcf ml and

through various meetings of mostly edinburgh people or people in the edinburgh

culture like myself i was then bill labs um we designed the standard ml

language from about 1983 to 86 and then robin's idea robin viewed it just as a

kind of language design effort and a major goal was to produce a formal

definition of the formal definition of the language using uh tools that had

been developed at edinburgh for operational semantics so there was a kind of

edinburgh style operational semantics and that was used to give a formal

definition of the ml uh language standard ml language and that was published as

a book by mit press 1990 um and um but raman's goal was essentially to produce

produce that language design and the corresponding formal definition published

as a book and uh as an actual programming language he had no intention of using

it and he wasn't terribly interested in implementing it uh he had a kind of

naive idea that uh uh once it was out there some commercial software house

would say we should write write a compiler for this thing uh of course that

didn't that eventually kind of happened there was a software house that uh

harlequin limited uh later on in the 80s that uh had a project to implement a

ml compiler because their boss at that time was interested in producing a lisp

compiler a ml compiler and a dylan compiler where dylan was this uh language

developed at apple that uh nobody remembers kind of a lisp dialect uh

interesting anyway uh but uh uh uh i was at bell labs and i uh got together

with the new young faculty member andrew appell at princeton who was interested

in and writing compilers and uh so we got together and uh started working in

spring of 1986 was working on a new compiler for standard ml and uh there were

other kind of derivative compilers uh there was something called edinburgh

standard ml which was kind of derived from an earlier edinburgh ml compiler

which was derived from a uh compile that luca cardelli wrote when he was a

graduate student um or dialect so the uh and then andrew was a real computer

scientist with a real computer science degree from carnegie mellon and he knew

about things like code generation runtime systems and garbage collection and so

on um and uh you know before when we developed hope for instance and when uh

the lcf ml was developed it was just piggybacking on in the lcf's case it was

piggybacking on a lisp implementation and so the kind of nitty-gritty stuff was

handled cogeneration garbage collection and so on were handled by the

underlying lisp and uh the hope implementation that i worked on was uh hosted

by a pop two which was uh an edinburgh developed uh cousin of lisp uh symbolic

programming language um rather influenced by by landon landonism maybe you can

speak to it i find it so interesting that you were at bell labs and appell at

princeton and in a roughly similar time period um i think you also had aho at

bell labs and ulman at princeton so we took very different approaches to

compilers yeah after edinburgh i went to um well i i tried to get back to

california so i had a kind of aborted so one year stay at isi in southern

california and i went to bell labs and um uh there a guy named robbie seti who

was one of the authors co-authors of the later edition of the dragon book on

compilers um he was interested he had become interested in semantics of

programming languages and so uh they hired me at bell labs and um um um aho was

there he was a department head of the kind of theory department in the center

this better way unix was developed and so there there was the hard hardcore

unix group that uh richie and thompson and kernahan and pike and later on and

uh and so on uh doug mcelroy who's my boss uh and uh uh that was the orthodox

culture at bell labs of course by the time i got there in the beginning of 81

uh unix was already a big success and well established and and so on and the

unix people basically all worked in the communal terminal room up on the fifth

floor um next to the pdp 70 right pdp 70 right right time sharing system and uh

i was never part of that group and two doubt two doors down the hall there was

this new guy um who joined bell labs a bit before i did probably 1980 or 79

named bjarne stustrup and he was working at trying to make c into an

object-oriented language because he had uh done his graduate work using simula

simula 67 at cambridge right right and simula simula simula was originally also

an extension of algol 60 but for parallel process simula was explicitly a

derivative of algol 60 right the idea the class was just uh uh almost like cut

and paste combination of blocks of algol code uh with weird control structures

um anyway uh so there was that object-oriented stuff going on down the hall but

uh uh we were on different bandwidths so there was better communication and uh

um also not very much communication between me and and the sort of unix

orthodox culture i you know used the unix system and uh one of the first things

i i did when i first arrived at bell labs to like make my computing world a

little bit more comfortable is first i ported a version of emacs to the uh

which was alien culture and to the local unix system and i also wrote a version

of yak in list so i had to figure out steve johnson's weird code for the yak um

anyway but pretty soon after that i got involved in well first uh working on

further development of hope and then starting in 83 and started working on

standard ml why do you think there was so little mutual interest between what

you were working on and the unix folks well it's kind of background and culture

the unix approach was very seat-in-the-pants kind of hacking uh approach and uh

they didn't think very formally about what they were doing basic express things

at two levels one was vague pictures on a on a blackboard whiteboard where you

had boxes and arrows between boxes and things like that and code so they they

would translate between these sort of vague diagrams and uh and uh assembly or

later c code and c is essentially a very high level pdp 11 assembly language if

as far as they're concerned um and uh you know i had a more mathematical way of

trying to understand what was going on and uh uh so it was not easy to

communicate um dennis ritchie originally had a mathematical background but uh

and as one point or another i tried to discuss concepts uh like the stream

concept in in unix with him um but uh yeah one main reason i was at bell labs

is because doug mcelroy who hired me had done some work relating to um stream

like co-routining and i had worked on that at edinburgh with with gil khan um

so that my first work in computer science so that involved functional

programming and uh lazy evaluation a particular way of uh organizing that and

it kind of connected conceptually with the notion of the stream in in in unix

you know piping pipelines uh of essentially stream processing where you know

the input and output were pipe streams um anyway um but uh srushe also didn't

connect with this orthodox culture very strongly because uh he as i said wanted

to turn c into an object-oriented language and he did that initially by writing

a bunch of pre-processor macros to define a class-like mechanism and that

evolved into c plus plus and much later c plus plus compilers it was because

initially kind of pre-processor based he had he started with the um cpp unix uh

pre-processor and evolved that into a more powerful pre-processing system was

called c front right right and at some stage it was called c with classes um

and later on c plus plus and uh and and it's interesting though now nowadays i

mean i think i think i think it's herb sutter that's working on uh something

called cpp front which is the the c front for c plus plus to to turn modern c

plus plus into yeah very interesting uh yeah i'm not very keen on macro

processing uh front ends especially if you if you're interested in types

they're very problematical right if you want to define a language that has a uh

well-defined uh provably sound type system macros are a huge mess right right

well maybe we can return to ml so we've got some sort of standard ml but at

inria there's the beginnings of what would be camel and then ocaml right so at

the beginning so the first meeting was a kind of accidental uh uh gathering of

people at edinburgh in april of 83 and robin wanted to present his uh proposed

uh standard ml that he worked on a bit earlier and it turned out that uh one of

the groups at inria that would have close connection with robin and uh research

work at edinburgh was uh gerard wetz group at uh inria and as a member of that

group there was a guy named guy kusino who worked on uh um was part of wetz

group at uh inria and he happened to be in edinburgh and kind of was involved

in these meetings as a kind of representative of the inria people and um he

took the ideas made notes and took the ideas back to edinburgh and they they

contributed a a critique or a you know feedback uh about the first uh iteration

of the design uh so in fact uh the inria people were connected to the

beginnings of standard ml through that connection and geek kusino and gerard

wetz um in the next next following year we had a second major design meeting

and um i believe kusino came to that one as well there were several people from

edinburgh and people who just happened to be around i was around um luca

cardelli was around of course rod burstall and robin milner and some postdocs

and grad students and so on uh and uh we made a decision i swim had two

alternative syntaxes for local declarations essentially a block structure one

was let declaration in expression where the in expression defined the scope of

the declarations and there was an alternate one that was used in in in i swim

which was expression where declaration so you have the declaration coming after

the expression and uh when they scale up for fairly obvious software

engineering reasons uh it's better to have the declarations before the uh scope

rather than after the scope because the scope might be uh uh you know you might

have a 10 page expression followed by the declarations on page 11. right right

it's kind of hard to understand the code and you have a kind of tug of war if

you have uh let declaration in expression where declaration to you know prime

or something which declaration applies first uh anyway so it seemed uh

unnecessary to deal with these difficulties with the wear form and so we

decided to drop the wear form and only have the let form in ml and uh this

upset the inria people um they thought that where was wonderful and they wanted

to keep wear so they decided you can't have let the anglo-saxons uh have

control of this thing so they they did their french uh branch or fork of the

design but they kept wear for a while and eventually dropped it anyway so

that's the original connection and unfortunately they also made their own you

know satisfied their own taste in terms of certain concrete syntaxes where you

just said the same thing but the concrete syntax was slightly different in

their dialect just making it um unnecessarily difficult to translate code back

and forth um and in this time shortly after some of the first standardization

efforts there was significant work done on the representation of modules is

that right yeah so that was my contribution um i had been thinking about a

module system for hope and i wrote a preliminary paper about that and it was

kind of inspired by uh work in kind of modularizing formal specifications in

software and there was kind of a school of algebraic specifications and ron

burstle was connected with that joint work with joseph gogan um who was at ibm

and later ucla um anyway so uh i was inspired by that party and also by work in

uh um type systems as logics uh curry howard i've heard of the curry howard

isomorphism things like that in the work of uh per martin luft the swedish

logician um and so there were these sort of type theoretic logics and so part

of the inspiration for module ideas came from that and part of it came from the

parallel work on modularizing algebraic specifications and uh so i wrote up a

when the first description of the first description of ml was published as a

paper in the 1984 list conference and i had a another paper in parallel

describing the module system and then over the next couple years we further

refined that and um it became a major feature of standard ml and um i'm still

thinking about that i would love to get that i would love to get to that once

we cover some of the other history in between there and some of the changes

you'd like to make because i think there's a lot of anyway implementation of

standard ml okay so uh obviously the lcf implementation was a compiler but it

compiled uh the ml code in the lcf system into lisp and then invoked the lisp

evaluator to to do execution um so code generation was basically a translation

to lisp and uh similarly that's the way i did the hope implementation by

translating to pop two and then evaluating in pop two which i believe is still

how to some extent the ocaml compiler today works i believe there's an option f

lambda that gives you these big lisp expressions uh yeah well it's easy to to

write an interpreter and right uh what happened with ocaml i won't try to

represent them sure because i wasn't involved in that but uh originally the

reason for the name camel c-a-m-l was that uh one of the researchers at inria

whose name just in you know floating in my head but i can't pull it up uh he

had been studying category category theoretic models of lambda calculus uh you

know there's a certain adjoint situation that can be used to define what a

function is and what function application is and out of that adjoint situation

in category theory you can derive some equations and then you can interpret

those equations as a kind of abstract machine and so he called that the

categorical abstract machine language or machine and then camel was introduced

as the categorical abstract machine language so there's no connection to ml as

in meta language it's a right so that ml how funny yeah there is a connection i

believe uh but uh another way of explaining the name is categorical abstract

machine language interesting but i think they they like that because it had

both ml and this other mathematical uh interpretation right right and so they

actually you know implemented this uh abstract machine uh derived from these

categorical equations but it was from an engineering point of view it didn't

work very well wasn't performing as well as they would like and so they fell

back at inria they had their own list uh that had been developed at lisp uh by

inria researchers it's called lulisp uh and even spun off into a company but

the lulisp people had uh developed infrastructure such as a runtime system with

garbage collection and a uh kind of low-level abstract machine uh into which

they compiled lisp and uh so after the the categorical abstract machine turned

out to be not so good from an engineering standpoint they adopted the lisp

infrastructure and uh translated the camel surface language into the uh lisp

abstract machine and ran it on the lulisp operating system uh lulisp runtime

system mm-hmm and um that was much better and um that was much better and um

was considered a serious thing because it was commercial being commercialized

mm-hmm better do with things like france lisp and and things like that at the

time various uh interlisp uh france lisp uh lulisp and um um uh but then a guy

named xavi lewar arrived as a young fresh researcher and he was a kind of

wizard programmer and he uh implemented a uh his own implementation of camel

which he called camel special light and because it was much more lightweight

and uh accessible if you like and it beat the pants off of the lulisp

implementation in terms of performance and so he became the kind of lead

developer as camel evolved and he worked at did some research at the language

level and also uh heroic work on the on the implementation and that's the

original origin and then later on a a phd student of dda ramey at inria uh as a

phd project developed an object system on top of camel and they thought that

was uh a neat opportunity for marketing because of the great popularity of

object-oriented programming and so it became whole camel but it was a good

experiment in the sense that the old camel programming community had an option

of using the intrinsic uh functional facilities of old camel as a essentially

an ml dialect or they could use the object-oriented extension and it turned out

nobody used the object-oriented extension and whether that's because it wasn't

a really good object-oriented extension or because the functional model beats

the object-oriented model which is my preferred explanation and so what did you

you were working on an sml implementation at the time what did that compiler

look like in right so we started uh and rappel arrived at princeton in early

1986 and we got together fairly quickly after that and decided to build a